What is Docker? Why was it created? What is the difference between a Docker image and a container? Why are there so many Docker tools, and are they all needed? In this article, I tell the whole story from the very beginning. And it all starts with installing Tomcat on a physical server.

Evolution from physical servers to Docker containers

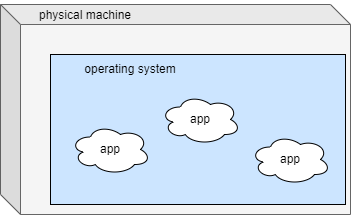

In a traditional deployment model, a system administrator installed an operating system on a physical server and then all the necessary software, tools, and packages, such as the JDK, Apache Tomcat, Python libraries, .NET Framework, an Oracle client, or SQL Server: whatever was needed to host and run a particular business application. Of course, each component had to be installed in a specific version to ensure compatibility, and it had to be properly configured. Later, when small-service architectures became popular, companies started building many small services instead of huge monolithic applications. Naturally, a single physical server could host several of them, but only if their software version requirements were compatible. Usually, you don't want to install different .NET Framework versions on the same machine. You can, but it can cause complications.

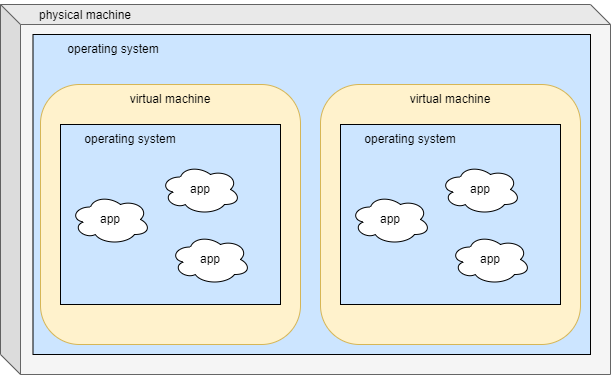

The next step was virtualization. Instead of deploying business applications directly on a physical machine, it became possible to share the same physical hardware between multiple virtual machines. They were still well isolated from each other and could run different operating systems, but in the end, they used the same hardware. This was hugely beneficial in the era of small services. Imagine a simple example: you have a powerful machine with 256 GB of RAM and you have to deploy 20 small services (miniservices) requiring only 8 GB of RAM each. If these services have different software requirements, deploying them on a single host might be tricky. Even if you succeed, restarting the operating system will temporarily shut down all of the services. It is much better to group the services based on their needs, e.g., into 4 groups, then create 4 virtual machines (VMs) on that single physical server and assign a portion of the RAM to each VM. Every virtual machine remains independent to some degree: each one may have a different operating system, different software versions installed, and so on. You can restart a single VM without affecting any other.
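The arithmetic behind this example can be checked with a few lines of Python. This is only a rough sketch: it assumes the 4 groups are equally sized, and the 2 GB of RAM reserved for each guest operating system is a number I made up for illustration.

```python
# Figures taken from the example above.
HOST_RAM_GB = 256
SERVICE_COUNT = 20
RAM_PER_SERVICE_GB = 8
VM_COUNT = 4

# Assumption for illustration only: each guest OS needs about 2 GB for itself.
GUEST_OS_OVERHEAD_GB = 2

# Assumption: the services are split into equally sized groups, one group per VM.
services_per_vm = SERVICE_COUNT // VM_COUNT
ram_per_vm_gb = services_per_vm * RAM_PER_SERVICE_GB + GUEST_OS_OVERHEAD_GB
total_allocated_gb = VM_COUNT * ram_per_vm_gb
spare_gb = HOST_RAM_GB - total_allocated_gb

print(f"{services_per_vm} services per VM, {ram_per_vm_gb} GB of RAM per VM")
print(f"{total_allocated_gb} GB allocated, {spare_gb} GB left on the host")
```

With these assumptions, each VM gets 42 GB and the host still has 88 GB to spare. Note that the guest operating systems alone take 8 GB in this sketch, and that overhead grows with every additional VM.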

When microservices became popular, virtualization turned out not to be enough. In the miniservices era, a single monolithic business application was replaced by, let's say, 5 miniservices. But the microservices concept goes further: a single microservice should have a single responsibility. Hence, these 5 miniservices are broken down into, for example, 20 or 50 microservices. Such growing numbers gradually become difficult to deploy and manage in a purely virtualized environment. We needed a lighter and more manageable solution.

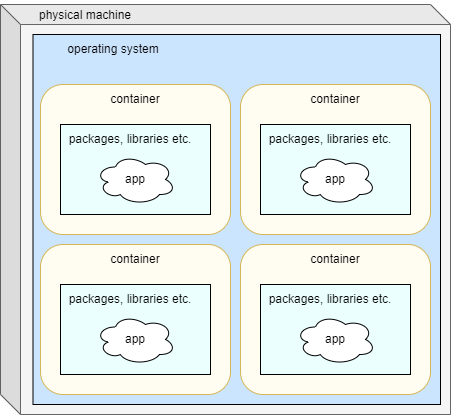

We needed a separate container for each of our microservices, still well isolated from the other containers, but we couldn't afford to install a full operating system in each one of them, and definitely not manually. That is where Docker came in.

What is Docker?

Docker is an open platform and a set of tools for creating, managing, and running containers. It provides a consistent and repeatable process for building, shipping, and running applications in different environments, such as development and production.

What is a Docker image and container?

The idea behind Docker is simple, and it is a natural evolution from virtual machines. If you have many VMs on a single host, you have to install a full operating system on each one, and those operating systems alone consume a lot of resources. Why not find the basic common elements that every VM needs and keep only one instance of them? That is the idea behind Docker containers.

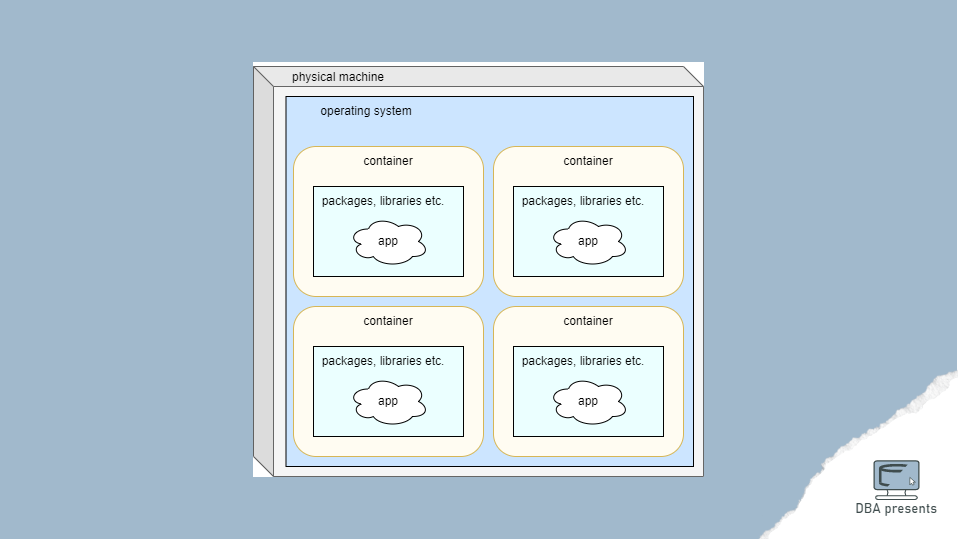

A Docker container is an isolated part of the host system. It has its own operating system files (but not its own kernel), packages, libraries, and other software, and it uses several elements of the host system to minimize resource consumption. Those shared elements are:

- Kernel - the host operating system shares its kernel with Docker containers, so system calls and resource management for all containers on the host are handled by the same kernel.

- File system - all containers store their files on the host's file system. As one container should not read the files of other containers, each container sees its own isolated stack of file system layers, created using a union file system: read-only layers that can be shared between containers, plus a writable layer that belongs to that container only.

- Network - containers reach the outside world through the host's network. By default, they are attached to a virtual network created on the host. If necessary, it can be configured differently.

- Hardware resources - containers share the same physical resources: CPU, memory, and disk space. As with the other elements, one container should not affect the others, so each container may have a limit configured or even a specific amount of a resource allocated to it.

- Environment variables - the host can pass selected environment variables to a container when it starts (they are not inherited automatically), and containers can also define their own variables or override values for their own use.

- Time - the host system shares its clock with the containers. However, the containers may have different time zones configured.

The goal of having multiple Docker containers in a single host system is to share as much as possible while keeping containers isolated from each other. Thanks to that, they are much lighter than virtual machines, but they still act as separate entities.
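To make the layered file system idea more tangible, here is a toy model in Python. It is not how Docker is implemented; it only mimics the behavior with `collections.ChainMap`, and all file names and contents are made up for illustration.

```python
from collections import ChainMap

# Read-only layers shared by every container in this toy model.
# File names and contents are invented for illustration.
base_layer = {"/etc/os-release": "linux base", "/bin/sh": "shell"}
app_layer = {"/app/service.jar": "application v1"}


def start_container():
    """Return a container view: a private writable layer on top of shared layers."""
    writable_layer = {}
    # ChainMap searches the mappings from left to right,
    # and writes always go to the first mapping only.
    return ChainMap(writable_layer, app_layer, base_layer)


container_a = start_container()
container_b = start_container()

# Container A creates one file and changes another one.
container_a["/tmp/cache"] = "private to A"
container_a["/etc/os-release"] = "patched in A"

print(container_a["/etc/os-release"])  # A sees its own modified copy
print(container_b["/etc/os-release"])  # B still sees the shared original
print("/tmp/cache" in container_b)     # A's new file is invisible to B
```

Both containers read the same shared layers, so nothing is duplicated until a container actually changes something, and even then the change stays in that container's own layer.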

Docker containers can also run in the cloud. On AWS, for example, they can be run using Amazon ECS or Amazon EKS.

A Docker image is a lightweight, standalone, executable package that contains everything needed to run a piece of software, including the code, runtime, libraries, dependencies, and system tools. It encapsulates an application and its environment, ensuring consistency and reproducibility across different computing environments. In other words, it describes how to create a container. That description is prepared by a software developer or a DevOps engineer. Docker then uses the image to create a container, and the same image can be used to create many separate containers.
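The description an image is built from is usually a text file called a Dockerfile. Going back to the Tomcat example from the beginning of the article, the Python sketch below renders a minimal one. The `tomcat:10.1` base image tag, the `orders.war` file name, and the `webapps` path are my assumptions for illustration, so check them against the base image you actually use.

```python
def render_dockerfile(base_image: str, war_file: str) -> str:
    """Render a minimal Dockerfile for a web application packaged as a WAR file."""
    lines = [
        # Start from an existing image that already contains Java and Tomcat.
        f"FROM {base_image}",
        # Assumption: the base image serves applications from this directory.
        f"COPY {war_file} /usr/local/tomcat/webapps/",
        # Document the port the application listens on.
        "EXPOSE 8080",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical base image tag and application file name.
dockerfile = render_dockerfile("tomcat:10.1", "orders.war")
print(dockerfile)
```

Everything the administrator used to install by hand on a physical server is now captured in a few repeatable lines: the image is built once from this description and can then be started as a container on any host that runs Docker.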

Docker tools

Docker tools were created to make the whole process simple and repeatable from the image creation to running a container. Here are the most important ones:

Docker Engine is the core and the foundation of Docker technology. It is what enables containerization. At its heart is a server that manages the containers. It exposes a REST API and a CLI that users and other tools use to interact with Docker.
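As a small illustration of the CLI, the Python sketch below assembles a `docker run` command that sets a memory limit, a CPU limit, an environment variable, and a published port. It only builds and prints the command, it does not execute it. The helper function, the image name `orders-service:1.0`, and the values are hypothetical; the flags are standard `docker run` options.

```python
import shlex


def docker_run_command(image, name, memory=None, cpus=None, env=None, ports=None):
    """Build the argument list for a detached `docker run` call."""
    command = ["docker", "run", "--detach", "--name", name]
    if memory:
        command += ["--memory", memory]       # e.g. "512m"
    if cpus:
        command += ["--cpus", str(cpus)]      # e.g. 1.5 CPU cores
    for key, value in (env or {}).items():
        command += ["--env", f"{key}={value}"]
    for host_port, container_port in (ports or {}).items():
        command += ["--publish", f"{host_port}:{container_port}"]
    command.append(image)
    return command


# Hypothetical image name and settings, for illustration only.
command = docker_run_command(
    "orders-service:1.0",
    name="orders",
    memory="512m",
    cpus=1.5,
    env={"APP_ENV": "production"},
    ports={8080: 8080},
)
print(shlex.join(command))
```

Returning a list instead of a single string means the command could later be passed to `subprocess.run` without any shell quoting problems.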

As containers were invented to host microservices, there are usually many containers in a single environment. Docker Compose lets you describe such a multi-container environment (its services, networks, and volumes) in a single YAML file and manage it as a whole.
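To give an idea of what such a file contains, the Python sketch below describes two services, a network, and a volume as a dictionary and prints it as YAML. The service names, the `orders-service:1.0` image, and the password are hypothetical, and the tiny YAML emitter is my own helper that handles only what this example needs.

```python
import json


def to_yaml(value, indent=0):
    """Tiny YAML emitter for nested dicts and lists of scalars (enough for this sketch)."""
    pad = "  " * indent
    lines = []
    if isinstance(value, dict):
        for key, item in value.items():
            if isinstance(item, (dict, list)) and item:
                lines.append(f"{pad}{key}:")
                lines.extend(to_yaml(item, indent + 1))
            elif isinstance(item, (dict, list)):
                empty = "{}" if isinstance(item, dict) else "[]"
                lines.append(f"{pad}{key}: {empty}")
            else:
                # json.dumps produces double-quoted strings, which are valid YAML.
                lines.append(f"{pad}{key}: {json.dumps(item)}")
    else:
        for item in value:
            lines.append(f"{pad}- {json.dumps(item)}")
    return lines


# Hypothetical two-service environment: an application and its database.
compose = {
    "services": {
        "orders": {
            "image": "orders-service:1.0",
            "ports": ["8080:8080"],
            "environment": {"DB_HOST": "db"},
            "depends_on": ["db"],
            "networks": ["backend"],
        },
        "db": {
            "image": "postgres:16",
            "environment": {"POSTGRES_PASSWORD": "change-me"},
            "volumes": ["db-data:/var/lib/postgresql/data"],
            "networks": ["backend"],
        },
    },
    "networks": {"backend": {}},
    "volumes": {"db-data": {}},
}

compose_yaml = "\n".join(to_yaml(compose)) + "\n"
print(compose_yaml)
```

Saved as a Compose file, a description like this lets you start or stop the whole environment with a single command instead of managing each container separately.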

Docker Swarm takes everything one level up. It manages Docker at a larger scale, across a cluster of hosts, and covers load balancing, scaling, rolling updates, and monitoring the state of the services. You declare how many instances of a container should run to provide the availability or performance you need, and Swarm works to keep that many running.
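The core idea, comparing the desired number of instances with what is actually running, can be sketched in a few lines of Python. This is a conceptual illustration only, not Swarm's actual code, and the function name is made up.

```python
def reconcile(desired_replicas: int, running_replicas: int) -> tuple[int, int]:
    """Return how many instances to start and how many to stop."""
    to_start = max(desired_replicas - running_replicas, 0)
    to_stop = max(running_replicas - desired_replicas, 0)
    return to_start, to_stop


# Three instances are wanted, but two of them have just crashed.
print(reconcile(desired_replicas=3, running_replicas=1))
# The service was scaled down from five instances to three.
print(reconcile(desired_replicas=3, running_replicas=5))
```

An orchestrator repeats a check like this continuously, which is why a crashed container gets replaced without anyone having to notice it first.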

In every organization where Docker is heavily used, many images are created and managed. Docker Hub is a registry where they can be stored, shared, and versioned.

Docker Desktop is a desktop tool with a GUI that wraps other Docker tools in a more user-friendly application.

Docker Scout helps deal with security vulnerabilities. It analyzes images, for example those stored in Docker Hub, for software that has known security issues and reports them.

Docker Build takes care of everything needed to create an image from its description and the application's files.

Summary

Docker's popularity is growing rapidly, which is no surprise given how popular microservices are. In this article, I described the history, the general concept behind Docker, and a few of the tools that make containerization possible. Bear in mind that these are only the Docker tools. There are many other, similar tools that also support containerization.