There is a setting on a database level called PAGE_VERIFY. It defines a method of page verification - how data pages are verified by SQL Server engine to make sure they are not broken. Starting from SQL Server 2005 three options are allowed: NONE, TORN_PAGE_DETECTION and CHECKSUM. Although Microsoft defined CHECKSUM as default and as a best practice, more work by a database engine needs to be done which may cause performance degradation.

There is a setting on a database level called PAGE_VERIFY. It defines a method of page verification - how data pages are verified by SQL Server engine to make sure they are not broken. Starting from SQL Server 2005 three options are allowed: NONE, TORN_PAGE_DETECTION and CHECKSUM. Although Microsoft defined CHECKSUM as default and as a best practice, more work by a database engine needs to be done which may cause performance degradation.

For those of you who administer databases created on SQL Server 2000 and still have not switched PAGE_VERIFY to CHECKSUM, I would like to present results of performance test that I performed.

Options

Storage where SQL Server stores files can get broken in many different ways. A DBA is lucky if it is reported explicitly by OS. Unfortunately, some damages can remain invisible for Operating System and not get reported to SQL Server. A broken data page stored on a disk can be readable but some bits can have incorrect values. If it remained unnoticed, SQL Server would return wrong data for queries that need this page. A user would not notice there is something wrong and the problem could be uncovered for a long time. The longer it is uncovered the higher cost of fixing it is.

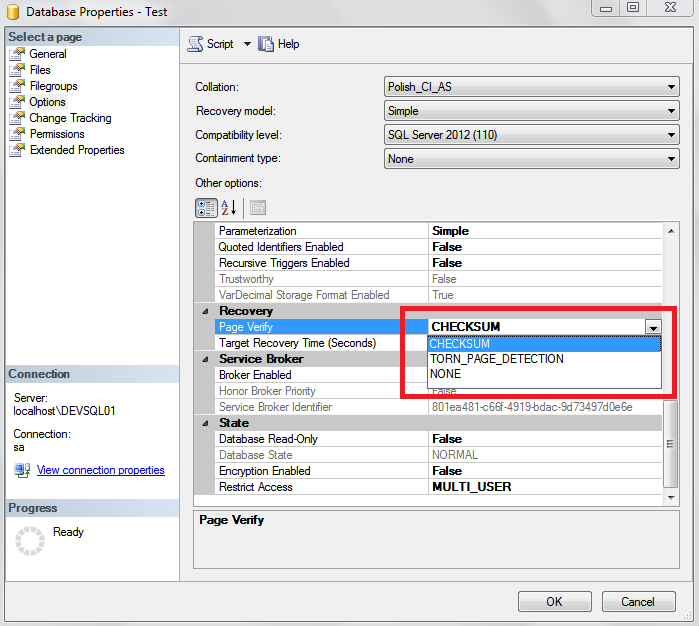

To prevent or at least reduce the risk of this problem, Microsoft implemented page verification methods on SQL Server engine level. The method can be chosen on Database Properties window on Options page.

Starting from SQL Server 2005 there are three options available:

NONE

When this option is used, SQL Server does not verify correctness of pages read from storage. From obvious reasons it generally should not be used. Even with extremely reliable storage devices and hardware configuration it is not a good choice. Any device may fail, it is better to know about it immediately.

TORN_PAGE_DETECTION

It was the default choice in SQL Server 2000. In this algorithm, for each page some bits are additionally stored in the page header during write operation. When the page is read from a disk during read operation, bits from the page header are compared to those in the page body. If a discrepancy is detected, the page is considered broken and the problem is reported to a user. It causes some small data duplication but it is very low - only small number of bits are duplicated. It is good because it does not consume much space, but unfortunately it does not detect all errors. If a broken bit is one of those that were not duplicated in the page header, the damage is not detected.

CHECKSUM

It is the default since SQL Server 2005. During write operation a checksum is calculated from the entire page and the result is saved in the page header. When the page is read from a disk, checksum is calculated again and compared to the value in the page header. The idea is similar to TORN_PAGE_DETECTION - both calculate a verification value for a page and store the result in the page header, but CHECKSUM uses the whole page for calculation which TORN_PAGE_DETECTION only a small number of bits.

Comparing to TORN_PAGE_DETECTION, it provides much better error cases coverage which makes it more reliable and sensitive for IO errors.

Performance

It is easy to guess, option NONE has no negative impact on performance of IO operations. TORN_PAGE_DETECTION requires copying some bits to the page header during writing and comparing them during reading. In theory, from all three page verification options, CHECKSUM should have the most significant impact as it requires calculating checksums for all IO operations. To verify these statements, I have performed a serious of tests.

Test description

I created a script which executed a SELECT query and returned one row by Primary Key then inserted a new row into the table. Both operations were executed in a loop one million times. It looked like below:

set nocount on

declare @numberOfTrans int = 0

declare @transID int

declare @startTime datetime

declare @value money

set @startTime = getDate()

while @numberOfTrans <= 1000000 begin

select @value = value + 1

from transactions

where trId = @numberOfTrans - 10000

if @value is null

set @value = 0

insert into transactions (userID, creationDate, value, tax)

values (1, getDate(), @value, null)

set @numberOfTrans = @numberOfTrans + 1

end

Test results

The script was executed against databases with PAGE_VERIFY set to NONE, TORN_PAGE_DETECTION and CHECKSUM. I measured amount of time needed for database engine to complete in each case. The test was repeated for each option 30 times. The results can be found in a table below:

| Test id | Script duration [s] | ||

| NONE | TORN_PAGE_DETECTION | CHECKSUM | |

| 1 | 991 | 1068 | 971 |

| 2 | 1010 | 979 | 1061 |

| 3 | 945 | 978 | 961 |

| 4 | 932 | 924 | 987 |

| 5 | 1041 | 924 | 1002 |

| 6 | 899 | 1262 | 1053 |

| 7 | 960 | 1042 | 923 |

| 8 | 971 | 964 | 1083 |

| 9 | 968 | 968 | 984 |

| 10 | 1070 | 1028 | 946 |

| 11 | 1063 | 981 | 973 |

| 12 | 998 | 1097 | 1174 |

| 13 | 1009 | 1972 | 1044 |

| 14 | 1248 | 1511 | 1297 |

| 15 | 1430 | 3231 | 2552 |

| 16 | 1776 | 1474 | 1378 |

| 17 | 1284 | 1261 | 1420 |

| 18 | 1262 | 1258 | 1197 |

| 19 | 1049 | 941 | 1128 |

| 20 | 1141 | 1280 | 1270 |

| 21 | 1133 | 1099 | 1384 |

| 22 | 1057 | 968 | 1577 |

| 23 | 1384 | 1210 | 1214 |

| 24 | 1067 | 1033 | 1159 |

| 25 | 989 | 1044 | 1116 |

| 26 | 961 | 973 | 930 |

| 27 | 1283 | 1031 | 967 |

| 28 | 1286 | 1102 | 1060 |

| 29 | 1107 | 1059 | 993 |

| 30 | 934 | 1085 | 983 |

| Average | 1108.267 | 1191.567 | 1159.567 |

Average times are not much different. TORN_PAGE_DETECTION seems to slow down IO operations a little comparing to NONE and CHECKSUM which can be surprising but the difference is so small that it can be qualified as measurement error. Not surprising is that the script executed fastest when NONE was chosen.

I have repeated the test a few times and the result was generally similar - not much difference in average execution times. CHECKSUM has not shown up as slower than TORN_PAGE_DETECTION.

Based on my test I have no doubts to switch my databases to CHECKSUM. Nevertheless, I recommend to make your own tests that best match your case.

Making the switch

Switching a database from TORN_PAGE_DETECTION to CHECKSUM is easy and quick. It can be done by running the following statement:

alter database [TestDb] set page_verify CHECKSUM

This command is lightweight and executes quickly. It is so, because nothing is done with already existing pages. There is no calculation of checksums and updating pages involved. Each page has its own marker which defines which method is used for the page. If a page is read from a disk, database engine checks the page header for a page verification method and verifies data accordingly to an algorithm. During write operation when a page is writen to a disk, it is stored with a checksum in the page header.