Besides exchanging JSON objects in a request or response body, a REST API can also transfer files. This is straightforward when the files are small. Things get more complicated when we have to respond with a big file. No one wants to keep a few hundred megabytes of data in memory while waiting for the client to slowly download it. The file must be streamed.

Recently, I posted an article on how to send a file over a REST API. This time, we will focus on large files.

I do not want to commit a big file to a code repository, so I create one in the controller constructor. This is purely for demonstration purposes. In real life, the file will come from another source, depending on your case.

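    import java.io.File;
    import java.io.FileWriter;
    import java.io.IOException;

    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    @RequestMapping("largefiles")
    public class LargeFileController {

        private static final String LARGE_FILE_PATH = "c:/temp/largefile.txt";

        // Demo only: generate the large file when the controller is created.
        public LargeFileController() throws IOException {
            createLargeFileOnDisk();
        }

        // Writes 10 million numbered lines of filler text to LARGE_FILE_PATH.
        private void createLargeFileOnDisk() throws IOException {
            File file = new File(LARGE_FILE_PATH);
            try (FileWriter writer = new FileWriter(file)) {
                for (int i = 0; i < 10000000; i++) {
                    writer.write(i + " qwertyuiopasdfghjlzxcvbnm\n");
                }
            }
        }
    }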

When the controller is initialized, the constructor is executed, which in turn calls the createLargeFileOnDisk method. The method creates a file on disk and writes 10 million rows of nonsense text to it. On my setup, this results in a file of about 340 MB, which can be used for the demo.
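As a rough cross-check of that size, here is a minimal JDK-only sketch. The class `DemoFileGenerator` and its methods are hypothetical helpers, not part of the controller above; the sketch writes to a temporary file instead of the hard-coded path and assumes ASCII output, so each character takes one byte.

```java
import java.io.BufferedWriter;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper for illustration; not part of the article's controller.
class DemoFileGenerator {

    // Same filler text as the controller writes after each row number.
    static final String FILLER = " qwertyuiopasdfghjlzxcvbnm\n";

    // Writes `rows` numbered lines to a new temporary file and returns its path.
    static Path generate(int rows) {
        try {
            Path file = Files.createTempFile("largefile", ".txt");
            try (BufferedWriter writer =
                     Files.newBufferedWriter(file, StandardCharsets.US_ASCII)) {
                for (int i = 0; i < rows; i++) {
                    writer.write(i + FILLER);
                }
            }
            return file;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Predicts the file size in bytes without touching the disk:
    // the digits of each row number plus the length of the filler text.
    static long expectedSize(int rows) {
        long total = 0;
        for (int i = 0; i < rows; i++) {
            total += Integer.toString(i).length() + FILLER.length();
        }
        return total;
    }
}
```

For 10,000,000 rows, `expectedSize` returns 338,888,890 bytes, which agrees with the roughly 340 MB observed on disk.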

Sending a file as ByteArrayResource

One way to return a file is to use ByteArrayResource, as in the following code.

    @RequestMapping("/bytearray")
    public ResponseEntity<ByteArrayResource> getByteArray() throws URISyntaxException, IOException {
        URI uri = new URI("file:///" + LARGE_FILE_PATH);
        File file = new File(uri);
        Path path = Paths.get(uri);

        HttpHeaders headers = new HttpHeaders();
        headers.add(HttpHeaders.CONTENT_DISPOSITION, "attachment; filename=receivedFile.txt");

        // Files.readAllBytes loads the entire file into memory before responding.
        return ResponseEntity.ok()
                .headers(headers)
                .contentLength(file.length())
                .contentType(MediaType.APPLICATION_OCTET_STREAM)
                .body(new ByteArrayResource(Files.readAllBytes(path)));
    }

This method is mapped to the /largefiles/bytearray URL. It reads all bytes from the file, creates a ByteArrayResource instance, and populates it with the file content, which means the whole file is loaded into JVM memory. Then, a ResponseEntity object is constructed with the resource as the body and returned over REST. We can guess that each request will have a significant impact on memory usage, and we can easily test it.

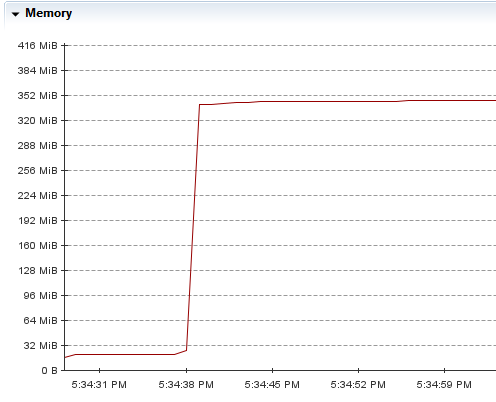

The above image is a screenshot from Azul Mission Control, which is the equivalent of JDK Mission Control for the Azul JDK, which I used for this project.

We can clearly see that the idle application was fine with about 30 MB of memory, but when I sent a request to get the file, memory usage grew to about 350 MB. This is not desired behavior. With many large files being served over REST and many simultaneous requests, the application will require a huge amount of memory.
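To put a number on "a huge amount", here is a back-of-the-envelope sketch. The class `MemoryEstimate`, the 20 concurrent requests, and the 8 KiB copy buffer are all assumptions chosen for illustration; real servers also hold other per-request buffers that this ignores.

```java
// Hypothetical estimate for illustration; ignores all other per-request overhead.
class MemoryEstimate {

    // Heap needed if every in-flight request holds the whole file as a byte array.
    static long bufferedBytes(long fileSizeBytes, int concurrentRequests) {
        return Math.multiplyExact(fileSizeBytes, (long) concurrentRequests);
    }

    // Heap needed if every in-flight request only holds one fixed-size copy buffer.
    static long streamedBytes(int bufferSizeBytes, int concurrentRequests) {
        return (long) bufferSizeBytes * concurrentRequests;
    }
}
```

With a 340,000,000-byte file and 20 simultaneous downloads, `bufferedBytes` gives 6,800,000,000 bytes (about 6.8 GB), while `streamedBytes` with an assumed 8,192-byte buffer gives 163,840 bytes.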

Streaming a large file through REST

A good alternative that solves the memory problem is streaming the file. We can take advantage of another type of resource: InputStreamResource.

    @RequestMapping("/streamresource")
    public ResponseEntity<Resource> getStreamResource() throws URISyntaxException, IOException {
        URI uri = new URI("file:///" + LARGE_FILE_PATH);
        File file = new File(uri);

        // Only the stream is opened here; the content is read while the response is written.
        Resource resource = new InputStreamResource(new FileInputStream(file));

        HttpHeaders headers = new HttpHeaders();
        headers.add(HttpHeaders.CONTENT_DISPOSITION, "attachment; filename=receivedFile.txt");

        return ResponseEntity.ok()
                .headers(headers)
                .contentLength(file.length())
                .contentType(MediaType.APPLICATION_OCTET_STREAM)
                .body(resource);
    }

In this approach, we do not read the whole file but open a FileInputStream instead. The stream is then used to construct an InputStreamResource, which is finally returned as the body of the ResponseEntity.
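To make the idea concrete, here is a JDK-only sketch of what copying a stream in chunks looks like. It is not Spring's actual implementation; the class `ChunkedCopy` and the buffer size parameter are hypothetical. The point is only that memory use is bounded by the buffer, not by the size of the file.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;

// Hypothetical illustration of chunked copying; not Spring's implementation.
class ChunkedCopy {

    // Copies everything from `in` to `out` through a fixed-size buffer,
    // closes `in` when done, and returns the number of bytes copied.
    static long copy(InputStream in, OutputStream out, int bufferSize) {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        try (InputStream source = in) {
            int read;
            // Each iteration holds at most `bufferSize` bytes of the file in memory.
            while ((read = source.read(buffer)) != -1) {
                out.write(buffer, 0, read);
                total += read;
            }
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }
}
```

In a web application, `in` would play the role of the FileInputStream and `out` the role of the HTTP response body; the framework performs the equivalent of this loop for us.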

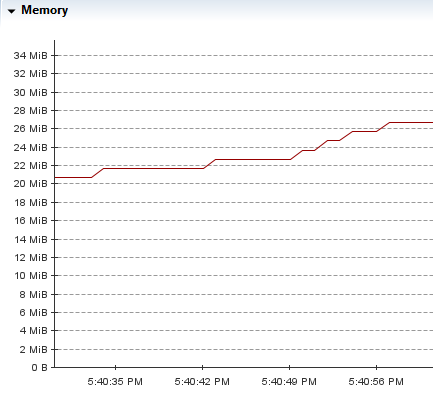

The main difference between this solution and the previous one, which was based on a byte array, is that here the file is not read all at once but read in parts and streamed to the client. As a side note, the returned resource is a Spring object, and Spring closes it once streaming ends. Wait. Did we forget about confirming low memory consumption?

The above chart presents memory usage. Somewhere in the middle, the file was streamed to a web browser, but I cannot even tell at which point exactly. Memory usage never grew above about 27 MB. Precise numbers do not matter, as they may depend on other activity. The point is that streaming files over REST is better for JVM memory than sending them as byte arrays.